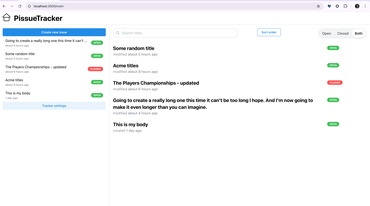

Last week I started a side project called PissueTracker, a web app that lets you create issues/bugs. What's cool about it is that it uses an offline database, namely IndexedDB in the browser. That means that every time you create or edit an issue, it's stored in a database in your browser. The problem is that, sooner or later, you might reset your entire browser. Or, once auth is working, you might want to access your data from an entirely different browser. So I'm adding a client-to-server sync. (It'll become two-way sync later.)

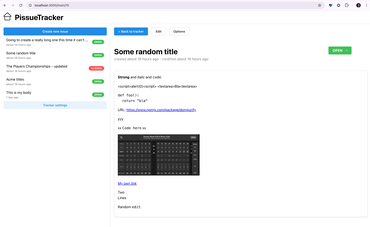

How it works is demonstrated with this code:

    db.issues
      .update(issue.id, { state: newState, syncedAt: null })
      .then((updated) => {
        if (updated) {
          notifications.show(...);
          mutate(issue.id);
        }
      });

The arguments to .update() are the ID first, then an object with the changes. No matter what the changes are (state: newState in this case), syncedAt: null is always tacked on.
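One way to make sure syncedAt: null is never forgotten is to route every local write through a small helper. This is only a minimal sketch, not PissueTracker's actual code: the Issue type and the markUnsynced name are made up for illustration.

```typescript
// Hypothetical shape of a locally stored issue (not the app's real schema).
type Issue = {
  id: number;
  title: string;
  state: "open" | "closed";
  syncedAt: Date | null;
};

// Fields a caller may change. syncedAt is excluded so it can't be set by accident.
type IssueChanges = Partial<Omit<Issue, "id" | "syncedAt">>;

// Returns the payload for .update(): the caller's changes plus syncedAt: null,
// which marks the record as "not yet confirmed by the server".
function markUnsynced(changes: IssueChanges): IssueChanges & { syncedAt: null } {
  return { ...changes, syncedAt: null };
}

const payload = markUnsynced({ state: "closed" });
// payload is { state: "closed", syncedAt: null }
```

With a helper like that, the call would read db.issues.update(issue.id, markUnsynced({ state: newState })).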

Then, after it has successfully updated the local database, it triggers that mutate function. Let's dig into that next.

The mutate function comes from the useMutation hook in TanStack Query. Its mutation function makes a POST request through upfetch, a wrapper around fetch. It looks like this:

    const issue = await db.issues.get({ id });
    const response = await upfetch(`/api/sync/issue/${tracker.uuid}`, {
      method: "POST",
      body: { id, issue },
    });
    if (response.ok) {
      db.issues.update(id, { syncedAt: new Date() });
      return response.json();
    }
    throw new Error("Failed to sync issue");

Get it?

First, we set syncedAt: null into the local browser database.

Then, we send the issue object to the server. Once that's done, we update the browser database again, but this time we set syncedAt to a valid Date.

Once all of this is done, you have a copy of the same issue payload on the server and in the client. The only difference is that syncedAt is null on the server, because the copy was sent before the local timestamp was set.
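To see the whole cycle in one place, here is a rough simulation that swaps IndexedDB and the server for two in-memory Maps. Everything in it (the Issue shape, saveLocally, fakePost, syncIssue, unsyncedIds) is a hypothetical stand-in, so treat it as an illustration of the idea rather than the real implementation.

```typescript
// Hypothetical record shape; the real app stores more fields.
type Issue = { id: number; title: string; state: string; syncedAt: Date | null };

// In-memory stand-ins for IndexedDB (client) and PostgreSQL (server).
const local = new Map<number, Issue>();
const server = new Map<number, Issue>();

// Step 1: write locally and mark the record as unsynced.
function saveLocally(issue: Omit<Issue, "syncedAt">): void {
  local.set(issue.id, { ...issue, syncedAt: null });
}

// Stand-in for the POST request: stores a copy and reports success.
async function fakePost(issue: Issue): Promise<boolean> {
  server.set(issue.id, { ...issue });
  return true;
}

// Steps 2 and 3: send the record, then stamp syncedAt locally on success.
async function syncIssue(id: number): Promise<void> {
  const issue = local.get(id);
  if (!issue) throw new Error(`No local issue with id ${id}`);
  const ok = await fakePost(issue);
  if (!ok) throw new Error("Failed to sync issue");
  local.set(id, { ...issue, syncedAt: new Date() });
}

// Anything still carrying syncedAt: null has not reached the server yet.
function unsyncedIds(): number[] {
  return Array.from(local.values())
    .filter((issue) => issue.syncedAt === null)
    .map((issue) => issue.id);
}
```

A nice side effect of the null marker is that a list like unsyncedIds() tells you which records to retry if a request failed or the browser was offline.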

The server is a Hono server that uses PostgreSQL. All records are stored as JSONB in PostgreSQL. That means we don't need to change any columns in PostgreSQL when the fields change in the Dexie.js definition.
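The server-side storage could look something like the sketch below. The post doesn't show the real schema, so the issues table and its tracker_uuid, issue_id and data columns are assumptions, as is the buildUpsert helper. It only builds a parameterized query; running it is left to whichever PostgreSQL client you use.

```typescript
// Hypothetical table: issues(tracker_uuid uuid, issue_id integer, data jsonb,
// PRIMARY KEY (tracker_uuid, issue_id)). The real schema is not shown in the post.
type SyncQuery = { text: string; values: [string, number, string] };

// Builds a parameterized upsert. The whole record goes into one JSONB column,
// so fields added on the client need no new PostgreSQL columns.
function buildUpsert(
  trackerUuid: string,
  issue: { id: number } & Record<string, unknown>,
): SyncQuery {
  return {
    text:
      "INSERT INTO issues (tracker_uuid, issue_id, data) " +
      "VALUES ($1, $2, $3::jsonb) " +
      "ON CONFLICT (tracker_uuid, issue_id) DO UPDATE SET data = EXCLUDED.data",
    values: [trackerUuid, issue.id, JSON.stringify(issue)],
  };
}

// A field the server has never seen before ("priority") passes through untouched.
const query = buildUpsert("00000000-0000-4000-8000-000000000000", {
  id: 2,
  title: "Trains are noisy",
  priority: "high",
});
```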

UPDATE

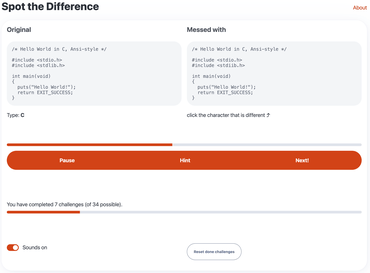

This idea is flawed. The problem is the use of incrementing integer IDs.

Suppose you have two distinct clients, i.e. two different people: one on a train (in a tunnel) and one on an airplane. If they both create a new row, they'd be sending:

    id: 2
    title: "Trains are noisy"
    body: ...

and

    id: 2
    title: "Airplanes are loud"
    body: ...

When both later synchronize with the central server, one record would overwrite the other.

The only solution is UUIDs.
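Here's a small simulation of that collision, and of how UUIDs avoid it. It's a sketch under a few assumptions: the server is modeled as a plain Map where the last write wins, the function names are invented, and crypto.randomUUID() is assumed to be available as a global (it is in current browsers on secure pages and in recent Node.js versions).

```typescript
type Issue = { id: string | number; title: string };

// Stand-in for the central server: one record per ID, last write wins.
function syncAll(batches: Issue[][]): Map<string | number, Issue> {
  const server = new Map<string | number, Issue>();
  for (const batch of batches) {
    for (const issue of batch) server.set(issue.id, issue);
  }
  return server;
}

// Each offline client numbers its own rows 1, 2, 3, ... independently.
function createWithIntegerIds(titles: string[]): Issue[] {
  return titles.map((title, index) => ({ id: index + 1, title }));
}

// Each client generates a random UUID per row instead.
function createWithUuids(titles: string[]): Issue[] {
  return titles.map((title) => ({ id: crypto.randomUUID(), title }));
}

const trainTitles = ["Hello from the train", "Trains are noisy"];
const planeTitles = ["Hello from the plane", "Airplanes are loud"];

// Integer IDs: both clients produced ids 1 and 2, so two issues are lost.
const withIntegers = syncAll([
  createWithIntegerIds(trainTitles),
  createWithIntegerIds(planeTitles),
]);

// UUIDs: all four issues survive.
const withUuids = syncAll([
  createWithUuids(trainTitles),
  createWithUuids(planeTitles),
]);
```

With integer IDs the server ends up with two issues, and id 2 holds "Airplanes are loud" because the airplane client synced last. With UUIDs it ends up with all four.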